Intelligence Was Born Out Of A Need To Predict The Future

- Ethan Smith

- Jul 17, 2025

- 10 min read

Updated: Jul 17, 2025

From an evolutionary perspective, it's clear that humans have made it to the top of the food chain, but what did it take for us to reach this point? Why have such a complex brain that uses a relatively substantial amount of resources for its size?

There are a number of theories as to why investing more evolutionary points in intelligence became justifiable for Homo sapiens.

The Social Intelligence Hypothesis recognizes the power of the pack and doubles down on it. While many animals have learned strength in numbers to some degree, furthering social intelligence has led to strategic advantages such as hierarchies, division of labor, extensive tribes, alliances, and enemies.

The Ecological Intelligence Hypothesis suggests that humans, a group that was often on the move, could maximize exploitation of their environments by understanding seasons, agriculture, tools, which plants are safe to eat, and beyond.

The Cultural Intelligence Hypothesis posits that initial improvements in culture and technology created pressure for greater learning abilities. Animals have benefitted from a set of built-in behaviors called fixed action patterns, like spiders spinning their webs, bees dancing to communicate, and salmon returning to their birthplace. Humans, on the other hand, have exchanged most fixed action patterns for greater behavioral plasticity, though at the cost of needing to learn such behaviors, trading off predefined solutions for good inductive biases to learn a myriad of solutions as needed. A few artifact fixed action patterns remain, like the Babinski reflex or lordosis, though these are often inconsistent or only present at certain ages. In place of these, our nature instead gives us a very broad blueprint with "inductive biases" guiding our adaptations to happen in a certain manner, but not guaranteeing it; i.e., we can identify genes that are commonly associated with restlessness or aggression, but it is not often we find genes for mental traits or behaviors that have a 100% co-occurrence rate. This paper describes the process of human development as a generative model, where DNA is a hidden variable conditioning the stochastic process of human development. This is to signify the newborn human as a relatively blank slate, ready to imitate the world around them.

While all of these provide different motivations, they all suggest that having a model of the world's dynamics, perhaps a predictive model one could consult for imagining futures in order to estimate the consequences of an action, would be beneficial. A world model having observed many seasons of poor crop yield may inform how one can plan for this ahead of time. A world model that has observed creatures with large teeth attack their prey could signal danger to oneself despite never having faced the creature directly. In deciding how we treat our peers, although we do not have access to their experiences, we may use a combination of our own experiences and their observed behaviors to infer their experiences. Even before needing to consider a very complex notion of self, we could imagine a model that could run simulations forward in time and provide an average "score" denoting the benefits towards survival and health gained from a given choice of action and make decisions from there. This is a common model we use for reinforcement learning.
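The "simulate forward and average a score" idea can be sketched in a few lines. This is only a toy illustration under assumed dynamics: the two actions (`forage`, `hunt`), their payoffs, and the function names are all hypothetical, and the scoring is a plain Monte Carlo average over imagined rollouts rather than any particular reinforcement learning algorithm.

```python
import random


def simulate(action, rng, horizon=5):
    """Roll one imagined future with a toy world model and return its total reward."""
    total = 0.0
    for _ in range(horizon):
        if action == "forage":
            # Assumed dynamics: foraging gives a small but reliable payoff.
            total += 1.0
        else:
            # Assumed dynamics: hunting pays +4 with probability 0.3, else costs 1.
            total += 4.0 if rng.random() < 0.3 else -1.0
    return total


def score_action(action, n_rollouts=2000, seed=0):
    """Average the outcome of many imagined futures for one action."""
    rng = random.Random(seed)
    return sum(simulate(action, rng) for _ in range(n_rollouts)) / n_rollouts


def choose_action(actions=("forage", "hunt")):
    """Pick the action whose simulated futures score best on average."""
    scores = {a: score_action(a) for a in actions}
    return max(scores, key=scores.get), scores


if __name__ == "__main__":
    best, scores = choose_action()
    print(best, scores)
```

The agent never has to live through a failed hunt to learn it is the worse bet here; consulting the model is enough, which is the advantage the paragraph above describes.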

As our experience and knowledge of the world occur through the senses, the big 5 and many more, running simulations may involve reconstructing the senses and observations that would occur in these hypothetical scenarios. At a very low level and short timeframe, we know our perception fills in gaps in places like vision for efficiency. We need not waste processing power on still, unchanging phenomena.

Predictive processing may also provide strategic advantages for fast reactions. The time it takes for sensory signals to travel along nerves to reach the brain ultimately dooms us to live a handful of milliseconds in the past. In the fast-paced competitive world of survival, this time is non-negligible.

If you went to swat an airborne pest, and you swung at the spot you currently see it in, you've already lost. Your vision has a latency before reaching the brain, and then there's an additional latency for sending the signal to your arm to swing, a speed that can't compete with the quick reactions of the fly, its small size yielding a very short distance for neural signals to travel. A similar case can be made for a baseball player needing to hit a baseball flying through the air at 100 miles per hour. We are required to act in accordance with where things will be and not where they are.

This, at the very least, necessitates a world model that can understand general principles of movement and physics, if not one that can also guess at the future behavior of an opponent. Other lifeforms wield defenses like poison or spikes to ward off predators, though somewhere along the way, evolution decided intelligence would be man's weapon of choice. An understanding of the rules of the arena humans have found themselves in, along with motor systems allowing for nearly universal interfacing with one's surroundings, would prove enough to reach the top of the food chain. Not only this, but "environmental manipulation" as a tactic, compared to other strategies like greater strength or speed, could allow for practically unbounded gains disproportionate to the expended energy. In other words, it’s the reason why humans eat far less than gorillas but can output greater force than gorillas through constructed machines siphoning energy from the environment. The long-term investment in brainpower began with an upfront tax but ended up allowing for far more capabilities than what would have been achieved by scaling an organism's mass and brute strength indefinitely. Not to mention, the larger the organism is, the longer it takes for neural signals to travel through the body, slowing its reactions.

Evolution has gone through all sorts of "metas" as to what makes an apex predator, but nothing has yet topped the ability to bend all aspects of an environment to one's will and ensure a significant home-court advantage in every battle.

Some would argue this opened doors to a new stage of evolution, a kind of meta-evolution or in-context evolution, where we now have the capabilities to substantially edit our phenotypes within a lifetime through surgeries and prosthetics. Some speak to how integrated some of our tools have become in our lives, like how a smartphone grows with us as an external database to store memories and communicate, but always within arm's reach, and with each technological advancement, becoming more embedded within ourselves. Not to forget, we are increasingly mastering the ability to edit genotypes pre-birth, now wielding control over what was once effectively chance.

While this was initially a challenging solution for evolution to find, given the cost, the tradeoffs, and the number of careful steps needed for success, this should help illustrate that a strong world model and a capable, adaptable agent make for a powerful combo.

Another fascinating aspect of the evolutionary objective is the degree to which it has created numerous mesa-optimization sub-objectives.

The evolutionary objective is simple. What works gets to stay; what doesn't is filtered out. In competitive scenarios with finite resources, not only does it need to work for the base environment itself, but it needs to work better than everything else. This competition introduces evolutionary sub-objectives, like what genes are best for camouflaging to hide from prey or what may allow for extra brawn. Aside from sub-objectives in the scope of optimizing a population, there are even agent-level goals that still precipitate from the original goal of survival of the fittest but in a wildly indirect and abstracted way, such as "How can I be accepted and protected by my community?", "How can I make money?", and "What clothes do I wear to impress a mate?"

The means by which we predict the future is hypothesized to be through a mechanism called Predictive Coding. Rather than a passive recorder of reality, the brain operates fundamentally as a future prediction machine.

Predictive coding is a means of processing stimuli that optimizes for efficiency. Instead of taking in and processing the entirety of incoming stimuli, we send downstream (top-down processing) a prediction that meets with the incoming stimuli (bottom-up processing). What we are left with is the residual or error between the sense and the prediction, which is what ultimately propagates to further levels in the brain. Anything we assume correctly has no need for further processing and can be "subtracted" from the incoming signal, allowing us to cheapen the workload. Thus, the more fluent the brain becomes in predicting its stimuli, the less the energy expenditure. However, to improve its predictive abilities, we need to be “wrong” initially, receive the sensory inputs as they are, and then adjust our prediction engine accordingly the next time. In learning to predict the world’s happenings before they happen, we effectively render a lossy version of the world.
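The loop described above (predict, subtract, pass on only the residual, then adjust) can be sketched as follows. This is a deliberately minimal single-layer toy, not a model of cortical predictive coding: the function names, the fixed `learning_rate`, and the use of a repeated identical stimulus are all assumptions made for illustration.

```python
def predictive_coding_step(prediction, signal, learning_rate=0.5):
    """Compare a top-down prediction with a bottom-up signal.

    Returns the residual error (the only thing passed upward) and the
    updated prediction to use the next time the stimulus arrives.
    """
    error = [s - p for s, p in zip(signal, prediction)]
    new_prediction = [p + learning_rate * e for p, e in zip(prediction, error)]
    return error, new_prediction


def expose_repeatedly(signal, steps=10, learning_rate=0.5):
    """Present the same stimulus several times and track how much error remains."""
    prediction = [0.0] * len(signal)  # start with no expectations at all
    error_sizes = []
    for _ in range(steps):
        error, prediction = predictive_coding_step(prediction, signal, learning_rate)
        # Total absolute error stands in for the "workload" left to process.
        error_sizes.append(sum(abs(e) for e in error))
    return error_sizes, prediction


if __name__ == "__main__":
    sizes, learned = expose_repeatedly([1.0, 0.5, -2.0])
    print(sizes)
    print(learned)
```

On the first exposure the whole signal is "surprise". With each repetition the residual shrinks, which is the sense in which a well-predicted stimulus becomes cheap to process.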

Our resulting experience is then a blend between the actual input signal and our hallucinated assumptions. We don't perceive raw reality; we perceive the brain's best guess about reality, constantly refined by error signals when predictions fail. What we call perception is therefore a form of controlled hallucination. The brain constructs a model of reality based on its predictions, and this constructed model becomes our experienced reality. Thankfully, though, this belief is constantly shaped and adapted by real-world signals to become “truthful.” In a way, though, our top-down perception has the “final say” on what we experience. Recall the Yanny vs. Laurel audio phenomenon or the black-blue vs. white-gold dress. Our observations influence our interpretation, but our inner model seems to decide on how we perceive it, sometimes outside of our control.

There are all sorts of optical illusions that highlight the role of predictive coding. This one, for instance, shows how contextual clues like lighting and nearby objects influence how we perceive color.

I like to analogize the model of predictive coding to memory foam, mirrors, or the memory pin toys. The medium catches the stimuli, imprinting them onto itself, and then the medium can throw them back out. Catch and return. “Throwing it back out” typically is an internal process we use to shape our experience and imaginations; however, like other described neural networks, it is a bona fide generative process, and we can even extract these signals to retrieve the images and thoughts we have. Mimicry really may be at the root of all learning, also giving a reason why transformers have become a standard of deep learning, given their effective ability for copying information via induction heads. Neural networks quite literally store superpositions of their training examples in their weights as something of a prototype vector aimed to respond to similar examples, thus giving way to generalization.

This also relates to the Hopfield Network theories of the mind.

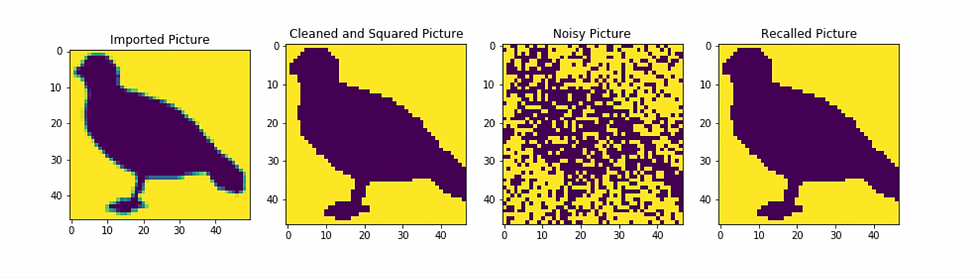

Hopfield networks are effectively “pattern-storers,” where a pattern is any combination of on/off neurons. If we allow these neurons to represent the color values of an image or a piece of text or other modalities of interest, they make for an interesting memory bank we can query. The way we query the Hopfield network is by providing a portion of one of its patterns. For instance, if we stored the image of a dog, and we provided a crop of the image, the Hopfield network would run forward in time to reconstruct the image.

By this nature, they are associative networks. They learn to associate patterns with partial observations, and through principles of energy minimization, we can store and recover the patterns.
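A small sketch of this store-and-recall behavior, assuming the classic binary Hopfield setup: units take values +1 or -1, weights come from a Hebbian outer-product rule, and recall updates one unit at a time. The two 8-unit patterns and the use of 0 to mark "unknown" units in the cue are choices made for this example only.

```python
def train(patterns):
    """Hebbian storage: units that are active together get a positive weight."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j] / len(patterns)
    return weights


def energy(weights, state):
    """Hopfield energy; stored patterns sit at low-energy states."""
    n = len(state)
    return -0.5 * sum(
        weights[i][j] * state[i] * state[j] for i in range(n) for j in range(n)
    )


def recall(weights, cue, max_sweeps=10):
    """Update one unit at a time until the state stops changing."""
    state = list(cue)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            field = sum(weights[i][j] * state[j] for j in range(len(state)))
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


# Two stored "memories" (+1 = on, -1 = off); chosen to be orthogonal.
pattern_a = [1, 1, 1, 1, -1, -1, -1, -1]
pattern_b = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train([pattern_a, pattern_b])

# A "crop" of pattern_a: the second half is unknown, marked here with 0.
cue = [1, 1, 1, 1, 0, 0, 0, 0]
recalled = recall(weights, cue)
```

Here `recalled` settles on `pattern_a`, and the energy of the recalled state is lower than that of the cue, which is the "energy minimization" view of memory retrieval.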

The analogy to the brain is that we may receive an input of something similar to one of our stored patterns or a noisy input, and by association we can recall relevant memories and make connections to a given stimulus. For instance, when we hear part of a song or hear it in a novel context, like over the radio, we can search our databases for the nearest match of things stored in our memories. More generally, the same thing happens whenever we observe something and it triggers a memory to surface. I believe the closest functionality it mirrors is that of the hippocampus.

The picture above may also remind you of diffusion models or denoising auto-encoders with respect to their denoising capabilities, which are both very close relatives of the Hopfield network and sometimes thought of as a modernization of it.

I talk about them a bit more in this presentation. This video also does a profoundly solid job explaining how memories are effectively stored as low-energy, stable regions of brain space that we can sit in instead of darting all about, and how, by descending the energy gradient, we can perform recall.

Spurred on by the simple, myopic objective of energy minimization and reflecting back incoming stimuli, we are guided to produce predictive models of the world.

Put another way, it is not just evolutionarily favorable but also thermodynamically favorable to have a compressed pocket version of your environment in your mind. It's things like this that lead me to believe there is some kind of fundamental force driving the universe to eventually model itself quite faithfully.

We established on a low, biological level how this can be desirable, but what do humans do now? We chase novelty, thereby filling out incomplete regions of our world model. We write out equations to predict movement from gravity or turbulent flow, relying on a notepad and calculator where mental models cannot provide needed levels of fidelity and precision. We reward people who predict correct futures through stock markets or Polymarket. We realize our individual limitations in modeling and look to our environment to aid us in the pursuit of erasing uncertainty. We work through problems together because two heads are better than one. We collect data. Loads of it. Data has become perhaps one of the most coveted resources in the era of deep learning, next to compute. If it wasn't certain before, it is clear now that humanity demands predictability beyond what individual brains can provide and will go so far as to fashion its environment into datacenters to do this bidding. It's sensible. With data science, marketing agencies can devise advertisements to cater to the right audiences, at the right time of day, with the right tone to maximize engagement. These models don't always get it right, though, and their simplicity can't cover much of real-world complexity. What we might really like to have is a fully faithful pocket universe where we could test out millions of simulations of advertisement deployments and see which performs best. A faithful simulation could allow us to perform drug trials rapidly, without needing to put real people at risk. It is clear how devising increasingly faithful models of our world is both incentivized for the well-being of humanity and also a place where corporations are willing to invest to get a competitive edge. We, humans and many other forms of life, are in an endless pursuit to minimize uncertainty in our world through constructing models, and a simulation of our universe appears to be intelligence’s end game.

More broadly speaking, the universe is knowingly or unknowingly on a mission to simulate itself.
